- S. Eisele, T. Eghtesad, K. Campanelli, P. Agrawal, A. Laszka, and A. Dubey, Safe and Private Forward-Trading Platform for Transactive Microgrids, ACM Trans. Cyber-Phys. Syst., vol. 5, no. 1, Jan. 2021.

@article{eisele2020Safe, author = {Eisele, Scott and Eghtesad, Taha and Campanelli, Keegan and Agrawal, Prakhar and Laszka, Aron and Dubey, Abhishek}, journal = {ACM Trans. Cyber-Phys. Syst.}, title = {Safe and Private Forward-Trading Platform for Transactive Microgrids}, year = {2021}, issn = {2378-962X}, month = jan, number = {1}, volume = {5}, address = {New York, NY, USA}, articleno = {8}, contribution = {lead}, doi = {10.1145/3403711}, issue_date = {January 2021}, keywords = {privacy, cyber-physical system, decentralized application, smart contract, transactive energy, Smart grid, distributed ledger, blockchain}, numpages = {29}, publisher = {Association for Computing Machinery}, tag = {decentralization, power}, url = {https://doi.org/10.1145/3403711} }

Transactive microgrids have emerged as a transformative solution for the problems faced by distribution system operators due to an increase in the use of distributed energy resources and rapid growth in renewable energy generation. Transactive microgrids are tightly coupled cyber and physical systems, which require resilient and robust financial markets where transactions can be submitted and cleared, while ensuring that erroneous or malicious transactions cannot destabilize the grid. In this paper, we introduce TRANSAX, a novel decentralized platform for transactive microgrids. TRANSAX enables participants to trade in an energy futures market, which improves efficiency by finding feasible matches for energy trades, reducing the load on the distribution system operator. TRANSAX provides privacy to participants by anonymizing their trading activity using a distributed mixing service, while also enforcing constraints that limit trading activity based on safety requirements, such as keeping power flow below line capacity. We show that TRANSAX can satisfy the seemingly conflicting requirements of efficiency, safety, and privacy, and we demonstrate its performance using simulation results.
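To make the idea of finding feasible matches under a safety limit concrete, here is a minimal Python sketch. It is not TRANSAX's clearing mechanism: the greedy price-priority matching, the `Offer` type, and the single shared line-capacity limit are all illustrative assumptions. It only shows how a safety constraint such as line capacity can cap the energy volume cleared in one trading interval.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Offer:
    prosumer: str
    energy: float  # kWh offered (seller) or requested (buyer) for one interval
    price: float   # limit price in $/kWh


def match_offers(sells: List[Offer], buys: List[Offer],
                 line_capacity: float) -> List[Tuple[str, str, float]]:
    """Greedy price-priority matching for a single trading interval.

    Hypothetical simplification: every trade flows over one shared line, so the
    total matched energy may not exceed line_capacity (kWh per interval).
    """
    sells = sorted(sells, key=lambda o: o.price)               # cheapest sellers first
    buys = sorted(buys, key=lambda o: o.price, reverse=True)  # highest bidders first
    remaining_sell = [o.energy for o in sells]
    remaining_buy = [o.energy for o in buys]
    headroom = line_capacity
    trades = []
    i = j = 0
    while i < len(sells) and j < len(buys) and headroom > 0:
        if buys[j].price < sells[i].price:
            break  # no remaining price-compatible pair
        qty = min(remaining_sell[i], remaining_buy[j], headroom)
        trades.append((sells[i].prosumer, buys[j].prosumer, qty))
        remaining_sell[i] -= qty
        remaining_buy[j] -= qty
        headroom -= qty  # safety constraint: consume line headroom
        if remaining_sell[i] == 0:
            i += 1
        if remaining_buy[j] == 0:
            j += 1
    return trades
```

Matching stops either when no buyer's limit price covers the cheapest remaining seller or when the line's headroom is exhausted, whichever comes first.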

- C. Hartsell, S. Ramakrishna, A. Dubey, D. Stojcsics, N. Mahadevan, and G. Karsai, ReSonAte: A Runtime Risk Assessment Framework for Autonomous Systems, in 16th International Symposium on Software Engineering for Adaptive and Self-Managing Systems, SEAMS 2021, 2021.

@inproceedings{resonate2021, author = {Hartsell, Charles and Ramakrishna, Shreyas and Dubey, Abhishek and Stojcsics, Daniel and Mahadevan, Nag and Karsai, Gabor}, booktitle = {16th {International} Symposium on Software Engineering for Adaptive and Self-Managing Systems, {SEAMS} 2021}, title = {ReSonAte: A Runtime Risk Assessment Framework for Autonomous Systems}, year = {2021}, category = {selectiveconference}, contribution = {colab}, acceptance = {30}, project = {cps-middleware,cps-reliability}, tag = {ai4cps} }

Autonomous Cyber-Physical Systems (CPSs) are often required to handle uncertainties and self-manage the system operation in response to problems and increasing risk in the operating paradigm. This risk may arise due to distribution shifts, environmental context, or failure of software or hardware components. Traditional techniques for risk assessment focus on design-time techniques such as hazard analysis, risk reduction, and assurance cases, among others. However, these static, design-time techniques do not consider the dynamic contexts and failures the systems face at runtime. We hypothesize that this requires a dynamic assurance approach that computes the likelihood of unsafe conditions or system failures considering the safety requirements, assumptions made at design time, past failures in a given operating context, and the likelihood of system component failures. We introduce the ReSonAte dynamic risk estimation framework for autonomous systems. ReSonAte reasons over Bow-Tie Diagrams (BTDs), which capture information about hazard propagation paths and control strategies. Our innovation is the extension of the BTD formalism with attributes for modeling the conditional relationships with the state of the system and environment. We also describe a technique for estimating these conditional relationships and equations for estimating risk based on the state of the system and environment. To help with this process, we provide a scenario modeling procedure that can use the prior distributions of the scenes and threat conditions to generate the data required for estimating the conditional relationships. To improve scalability and reduce the amount of data required, this process considers each control strategy in isolation and composes several univariate distributions into one complete multivariate distribution for the control strategy in question. Lastly, we demonstrate the effectiveness of our approach using two separate autonomous system simulations: CARLA and an unmanned underwater vehicle.
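The composition step described above, building a multivariate distribution for one control strategy out of univariate ones, can be sketched under an explicit independence assumption. The Python below is a hypothetical illustration, not ReSonAte's estimator: `joint_probability` multiplies independent per-variable probabilities, and `path_risk` applies the usual bow-tie reading that a consequence on one path requires the threat to occur and every barrier (control strategy) on that path to fail. The variable names, probabilities, and severity scale are invented for illustration.

```python
import math
from typing import Dict, Iterable


def joint_probability(marginals: Dict[str, Dict[str, float]],
                      scene: Dict[str, str]) -> float:
    """Probability of a scene under independent univariate distributions.

    marginals: {variable: {value: probability}}, one distribution per variable.
    scene:     {variable: observed value}.
    Independence across variables is an assumption of this sketch.
    """
    return math.prod(marginals[var][value] for var, value in scene.items())


def path_risk(threat_probability: float,
              barrier_failure_probs: Iterable[float],
              severity: float) -> float:
    """Risk along one bow-tie path: the threat occurs and every barrier on the
    path fails (failures treated as independent), scaled by consequence severity."""
    return threat_probability * math.prod(barrier_failure_probs) * severity


# Hypothetical priors over two environmental variables.
marginals = {
    "precipitation": {"none": 0.7, "heavy": 0.3},
    "time_of_day": {"day": 0.6, "night": 0.4},
}
scene_prob = joint_probability(marginals, {"precipitation": "heavy", "time_of_day": "night"})
risk = path_risk(threat_probability=0.1, barrier_failure_probs=[0.2, 0.5], severity=10.0)
```

Treating variables as independent is what lets a handful of univariate priors stand in for a full joint table; whether that assumption is acceptable depends on the domain.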

- S. Eisele, T. Eghtesad, N. Troutman, A. Laszka, and A. Dubey, Mechanisms for Outsourcing Computation via a Decentralized Market, in 14th ACM International Conference on Distributed and Event-Based Systems, 2020.

@inproceedings{eisele2020mechanisms, author = {Eisele, Scott and Eghtesad, Taha and Troutman, Nicholas and Laszka, Aron and Dubey, Abhishek}, booktitle = {14th ACM International Conference on Distributed and Event-Based Systems}, title = {Mechanisms for Outsourcing Computation via a Decentralized Market}, year = {2020}, acceptance = {25.5}, category = {selectiveconference}, contribution = {lead}, keywords = {transactive}, tag = {platform,decentralization} }

As the number of personal computing and IoT devices grows rapidly, so does the amount of computational power that is available at the edge. Since many of these devices are often idle, there is a vast amount of computational power that is currently untapped, and which could be used for outsourcing computation. Existing solutions for harnessing this power, such as volunteer computing (e.g., BOINC), are centralized platforms in which a single organization or company can control participation and pricing. By contrast, an open market of computational resources, where resource owners and resource users trade directly with each other, could lead to greater participation and more competitive pricing. To provide an open market, we introduce MODiCuM, a decentralized system for outsourcing computation. MODiCuM deters participants from misbehaving, which is a key problem in decentralized systems, by resolving disputes via dedicated mediators and by imposing enforceable fines. Unlike other decentralized outsourcing solutions, MODiCuM minimizes computational overhead since it does not require global trust in mediation results. We provide analytical results proving that MODiCuM can deter misbehavior, and we evaluate the overhead of MODiCuM using experimental results based on an implementation of our platform.
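The deterrence argument rests on an expected-payoff comparison. The sketch below is a generic illustration, not MODiCuM's actual analysis (which also accounts for mediators and the protocol's other costs): a risk-neutral participant gains nothing by cheating once the fine, weighted by the probability that a dispute exposes the cheating, at least matches the gain. The function names and the risk-neutrality assumption belong to this sketch only.

```python
def cheating_pays(gain: float, detection_probability: float, fine: float) -> bool:
    """True if a risk-neutral participant expects to profit from misbehaving.

    gain:                  payoff from cheating (e.g., skipping the computation)
    detection_probability: chance that a dispute/mediation exposes the cheating
    fine:                  enforceable penalty (e.g., a forfeited deposit)
    """
    if not 0.0 <= detection_probability <= 1.0:
        raise ValueError("detection_probability must be in [0, 1]")
    return gain > detection_probability * fine


def minimum_deterring_fine(gain: float, detection_probability: float) -> float:
    """Fine at which the expected penalty equals the gain; any larger fine
    makes cheating a strict expected loss."""
    if detection_probability <= 0.0:
        raise ValueError("no finite fine deters cheating that is never detected")
    return gain / detection_probability
```

The second function makes the design tension visible: the rarer detection is, the larger the fine must be, which is one reason dispute resolution by mediators matters.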

- S. Hasan, A. Dubey, G. Karsai, and X. Koutsoukos, A game-theoretic approach for power systems defense against dynamic cyber-attacks, International Journal of Electrical Power & Energy Systems, vol. 115, 2020.

@article{Hasan2020, author = {Hasan, Saqib and Dubey, Abhishek and Karsai, Gabor and Koutsoukos, Xenofon}, journal = {International Journal of Electrical Power \& Energy Systems}, title = {A game-theoretic approach for power systems defense against dynamic cyber-attacks}, year = {2020}, issn = {0142-0615}, volume = {115}, contribution = {colab}, doi = {10.1016/j.ijepes.2019.105432}, file = {:Hasan2020-A_Game_Theoretic_Approach_for_Power_Systems_Defense_against_Dynamic_Cyber_Attacks.pdf:PDF}, keywords = {Cascading failures, Cyber-attack, Dynamic attack, Game theory, Resilience, Smart grid, Static attack, smartgrid, reliability}, project = {cps-reliability}, tag = {platform,power}, url = {http://www.sciencedirect.com/science/article/pii/S0142061519302807} }

Technological advancements in today’s electrical grids give rise to new vulnerabilities and increase the potential attack surface for cyber-attacks that can severely affect the resilience of the grid. Cyber-attacks are increasing both in number and in sophistication, and these attacks can be strategically organized in chronological order (dynamic attacks), where they can be instantiated at different time instants. The chronological order of attacks enables us to uncover those attack combinations that can cause severe system damage, but this concept has remained unexplored due to the lack of dynamic attack models. Motivated by this idea, we consider a game-theoretic approach to design a new attacker-defender model for power systems. Here, the attacker can strategically identify the chronological order in which the critical substations and their protection assemblies can be attacked in order to maximize the overall system damage. In turn, the defender can intelligently identify the critical substations to protect such that the system damage is minimized. We apply the developed algorithms to the IEEE 39-bus and 57-bus systems with finite attacker/defender budgets.
Our results show the effectiveness of these models in improving the system resilience under dynamic attacks.
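A toy version of the attacker-defender model shows why chronological order matters. In the hypothetical Python sketch below, an attack's damage includes a bonus when one substation is hit right after another (a crude stand-in for cascading effects); the attacker searches over ordered attack sequences within its budget, and the defender picks the protection set that minimizes the attacker's best response. The damage model, the brute-force search, and all numbers are assumptions; the paper's models and the IEEE test systems are far richer.

```python
from itertools import combinations, permutations
from typing import Dict, List, Sequence, Tuple

Pair = Tuple[str, str]


def attack_damage(sequence: Sequence[str], base_damage: Dict[str, float],
                  cascade_bonus: Dict[Pair, float]) -> float:
    """Hypothetical damage model: each attacked substation adds its base damage,
    and attacking b immediately after a adds cascade_bonus[(a, b)], so order matters."""
    damage = sum(base_damage[s] for s in sequence)
    damage += sum(cascade_bonus.get(pair, 0) for pair in zip(sequence, sequence[1:]))
    return damage


def best_attack(targets: List[str], budget: int, base_damage: Dict[str, float],
                cascade_bonus: Dict[Pair, float]) -> Tuple[Tuple[str, ...], float]:
    """Attacker's best response: exhaustive search over ordered sequences."""
    best_seq, best_dmg = (), 0
    for k in range(1, budget + 1):
        for seq in permutations(targets, k):
            dmg = attack_damage(seq, base_damage, cascade_bonus)
            if dmg > best_dmg:
                best_seq, best_dmg = seq, dmg
    return best_seq, best_dmg


def best_defense(substations: List[str], defender_budget: int, attacker_budget: int,
                 base_damage: Dict[str, float], cascade_bonus: Dict[Pair, float]):
    """Defender minimizes the attacker's best-response damage (min-max)."""
    best = None
    for protected in combinations(substations, defender_budget):
        exposed = [s for s in substations if s not in protected]
        _, dmg = best_attack(exposed, attacker_budget, base_damage, cascade_bonus)
        if best is None or dmg < best[1]:
            best = (protected, dmg)
    return best


base = {"A": 3, "B": 2, "C": 1}
bonus = {("B", "C"): 5}
plan = best_defense(["A", "B", "C"], defender_budget=1, attacker_budget=2,
                    base_damage=base, cascade_bonus=bonus)
```

In this toy instance, protecting A (the substation with the largest standalone damage) would leave the B-then-C sequence open with damage 8, so the order-aware defender protects B instead and holds the worst case to 4.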

- G. Pettet, A. Mukhopadhyay, M. Kochenderfer, Y. Vorobeychik, and A. Dubey, On Algorithmic Decision Procedures in Emergency Response Systems in Smart and Connected Communities, in Proceedings of the 19th Conference on Autonomous Agents and MultiAgent Systems, AAMAS 2020, Auckland, New Zealand, 2020.

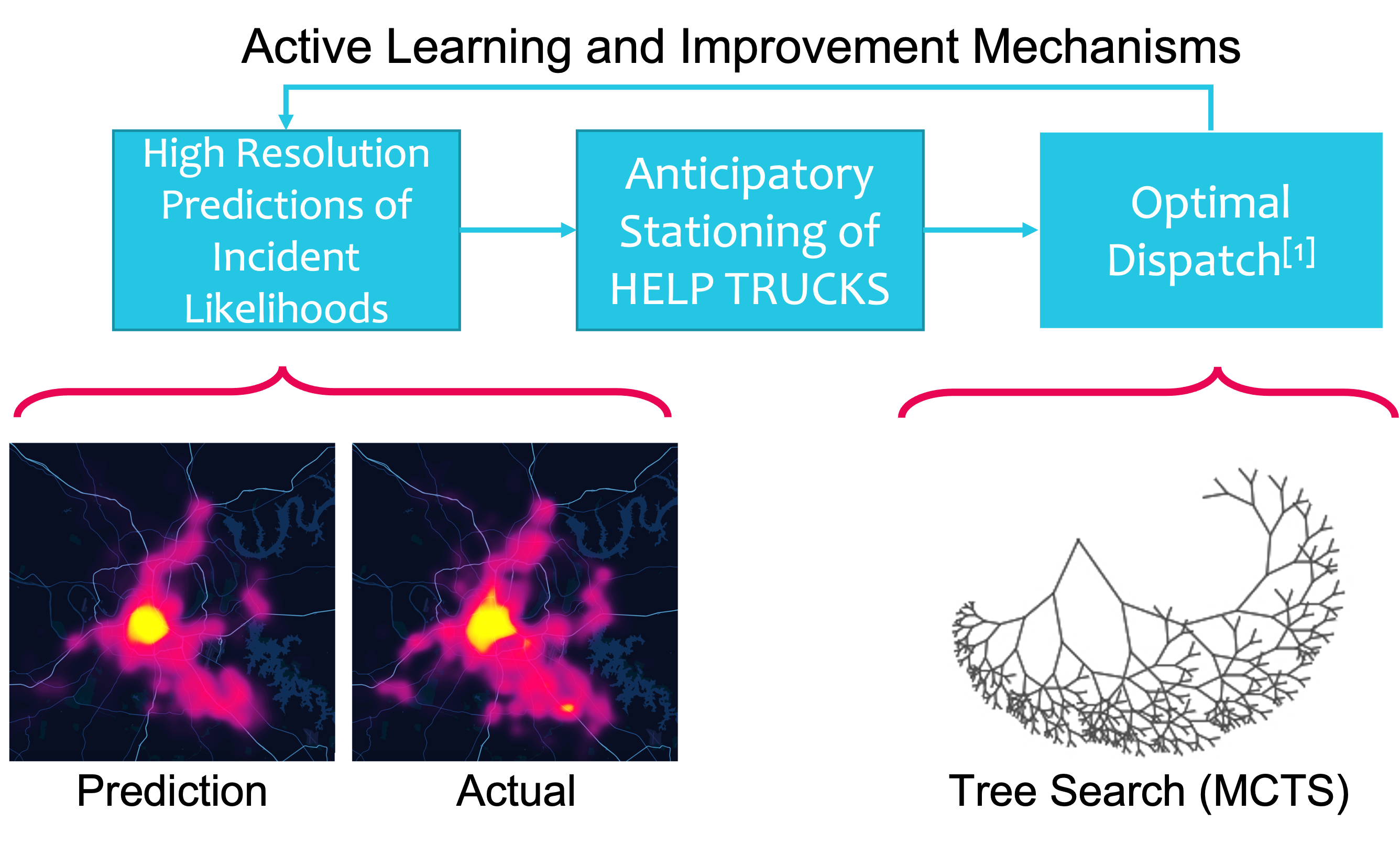

@inproceedings{Pettet2020, author = {Pettet, Geoffrey and Mukhopadhyay, Ayan and Kochenderfer, Mykel and Vorobeychik, Yevgeniy and Dubey, Abhishek}, booktitle = {Proceedings of the 19th Conference on Autonomous Agents and MultiAgent Systems, {AAMAS} 2020, Auckland, New Zealand}, title = {On Algorithmic Decision Procedures in Emergency Response Systems in Smart and Connected Communities}, year = {2020}, category = {selectiveconference}, contribution = {lead}, acceptance = {23}, keywords = {emergency, performance}, project = {smart-emergency-response,smart-cities}, tag = {ai4cps, decentralization,incident}, timestamp = {Wed, 17 Jan 2020 07:24:00 +0200} }

Emergency Response Management (ERM) is a critical problem faced by communities across the globe. Despite its importance, it is common for ERM systems to follow myopic and straightforward decision policies in the real world. Principled approaches to aid decision-making under uncertainty have been explored in this context but have failed to be accepted into real systems. We identify a key issue impeding their adoption: algorithmic approaches to emergency response focus on reactive, post-incident dispatching actions, i.e., optimally dispatching a responder after incidents occur. However, the critical nature of emergency response dictates that when an incident occurs, first responders always dispatch the closest available responder to the incident. We argue that the crucial period of planning for ERM systems is not post-incident, but between incidents. Yet this is not a trivial planning problem: a major challenge with dynamically balancing the spatial distribution of responders is the complexity of the problem. An orthogonal problem in ERM systems is to plan under limited communication, which is particularly important in disaster scenarios that affect communication networks. We address both problems by proposing two partially decentralized multi-agent planning algorithms that utilize heuristics and the structure of the dispatch problem. We evaluate our proposed approach using real-world data, and find that in several contexts, dynamically rebalancing the spatial distribution of emergency responders reduces both the average response time and its variance.
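The baseline dispatch rule described above, always sending the closest available responder, is easy to state in code, which also makes clear why the interesting planning happens between incidents: once an incident arrives, the choice is fixed by where responders already are. This minimal Python sketch uses straight-line distance as a stand-in for travel time; the responder records and coordinates are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]


def dispatch_closest(responders: Dict[str, Tuple[Point, bool]],
                     incident: Point) -> Optional[str]:
    """Return the id of the closest available responder, or None if none is free.

    responders: {responder_id: ((x, y), available)}
    incident:   (x, y) location of the incident
    Euclidean distance is an assumed proxy for travel time; ties break by id.
    """
    candidates = [
        (math.dist(location, incident), rid)
        for rid, (location, available) in responders.items()
        if available
    ]
    if not candidates:
        return None
    return min(candidates)[1]


fleet = {"r1": ((0, 0), True), "r2": ((1, 1), False), "r3": ((5, 5), True)}
chosen = dispatch_closest(fleet, (2, 2))
```

Because this rule is fixed, the only lever left to a planner is where idle responders wait, which is exactly the rebalancing problem the paper targets.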

- S. Ramakrishna, C. Hartsell, M. P. Burruss, G. Karsai, and A. Dubey, Dynamic-weighted simplex strategy for learning enabled cyber physical systems, Journal of Systems Architecture, vol. 111, p. 101760, 2020.

@article{ramakrishna2020dynamic, author = {Ramakrishna, Shreyas and Hartsell, Charles and Burruss, Matthew P. and Karsai, Gabor and Dubey, Abhishek}, journal = {Journal of Systems Architecture}, title = {Dynamic-weighted simplex strategy for learning enabled cyber physical systems}, year = {2020}, issn = {1383-7621}, pages = {101760}, volume = {111}, contribution = {lead}, doi = {10.1016/j.sysarc.2020.101760}, keywords = {Convolutional Neural Networks, Learning Enabled Components, Reinforcement Learning, Simplex Architecture}, tag = {ai4cps}, url = {https://www.sciencedirect.com/science/article/pii/S1383762120300540} }

Cyber Physical Systems (CPS) have increasingly started using Learning Enabled Components (LECs) for performing perception-based control tasks. Their simple design approach and their capability to continuously learn have led to their widespread use in different autonomous applications. Despite their simplicity and impressive capabilities, these components are difficult to assure, which makes their use challenging. The problem of assuring CPS with untrusted controllers has been addressed using the Simplex Architecture. This architecture integrates the system to be assured with a safe controller and provides a decision logic to switch between the decisions of these controllers. However, the key challenges in using the Simplex Architecture are: (1) designing an effective decision logic, and (2) avoiding the inconsistent system performance caused by sudden transitions between controller decisions. To address these research challenges, we make three key contributions: (1) dynamic-weighted simplex strategy: we introduce “weighted simplex strategy” as the weighted ensemble extension of the classical Simplex Architecture, and we then provide a reinforcement-learning-based mechanism to find dynamic ensemble weights; (2) middleware framework: we design a framework that allows the use of the dynamic-weighted simplex strategy and provides a resource manager to monitor the computational resources; and (3) hardware testbed: we design a remote-controlled car testbed called DeepNNCar to test and demonstrate the aforementioned key concepts. Using the hardware, we show that the dynamic-weighted simplex strategy has 60% fewer out-of-track occurrences (soft constraint violations), while demonstrating higher optimized speed (performance) of 0.4 m/s during indoor driving than the original LEC-driven system.
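The weighted ensemble at the heart of the weighted simplex strategy can be read as a convex combination of the two controllers' outputs, u = w * u_LEC + (1 - w) * u_safe with w in [0, 1]; the classical Simplex Architecture is the special case where w is only ever 0 or 1. The Python sketch below assumes that form and treats w as an input: in the paper the weight comes from a reinforcement-learning policy, which is not modeled here, and the (steering, throttle) action layout is an assumption.

```python
from typing import Sequence, Tuple


def weighted_simplex(u_lec: Sequence[float], u_safe: Sequence[float],
                     w: float) -> Tuple[float, ...]:
    """Blend the learning-enabled and safety controllers' actions.

    w = 1 trusts the LEC fully, w = 0 falls back to the safety controller,
    and intermediate values smooth the transition between the two.
    """
    if not 0.0 <= w <= 1.0:
        raise ValueError("ensemble weight w must lie in [0, 1]")
    if len(u_lec) != len(u_safe):
        raise ValueError("controllers must produce actions of the same dimension")
    return tuple(w * a + (1.0 - w) * b for a, b in zip(u_lec, u_safe))


# Hypothetical (steering, throttle) commands from each controller.
action = weighted_simplex((0.8, 0.5), (0.2, 0.3), w=0.75)
```

Blending rather than switching is what removes the sudden jumps between controller decisions; varying w over time lets the system lean on the safety controller only as much as the situation requires.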

- F. Sun, A. Dubey, J. White, and A. Gokhale, Transit-hub: a smart public transportation decision support system with multi-timescale analytical services, Cluster Computing, vol. 22, no. Suppl 1, pp. 2239–2254, Jan. 2019.

@article{Sun2019, author = {Sun, Fangzhou and Dubey, Abhishek and White, Jules and Gokhale, Aniruddha}, journal = {Cluster Computing}, title = {Transit-hub: a smart public transportation decision support system with multi-timescale analytical services}, year = {2019}, month = jan, number = {Suppl 1}, pages = {2239--2254}, volume = {22}, bibsource = {dblp computer science bibliography, https://dblp.org}, biburl = {https://dblp.org/rec/bib/journals/cluster/SunDWG19}, contribution = {lead}, doi = {10.1007/s10586-018-1708-z}, file = {:Sun2019-Transit-hub_a_smart_public_transportation_decision_support_system_with_multi-timescale_analytical_services.pdf:PDF}, keywords = {transit}, project = {smart-cities,smart-transit}, tag = {transit}, timestamp = {Wed, 21 Aug 2019 01:00:00 +0200}, url = {https://doi.org/10.1007/s10586-018-1708-z} }

Public transit is a critical component of a smart and connected community. As such, citizens expect and require accurate information about real-time arrivals and departures of transportation assets. As transit agencies enable large-scale integration of real-time sensors and support back-end data-driven decision support systems, the dynamic data-driven applications systems (DDDAS) paradigm becomes a promising approach to make the system smarter by providing online model learning and multi-time scale analytics as part of the decision support system that is used in the DDDAS feedback loop. In this paper, we describe a system in use in Nashville and illustrate the analytic methods developed by our team. These methods use both historical and real-time streaming data for online bus arrival prediction. The historical data is used to build classifiers that enable us to create expected performance models as well as identify anomalies. These classifiers can be used to provide schedule adjustment feedback to the metro transit authority.
We also show how these analytics services can be packaged into modular, distributed and resilient micro-services that can be deployed on both cloud back ends as well as edge computing resources.

- C. Hartsell, N. Mahadevan, S. Ramakrishna, A. Dubey, T. Bapty, T. T. Johnson, X. D. Koutsoukos, J. Sztipanovits, and G. Karsai, CPS Design with Learning-Enabled Components: A Case Study, in Proceedings of the 30th International Workshop on Rapid System Prototyping, RSP 2019, New York, NY, USA, October 17-18, 2019, 2019, pp. 57–63.

@inproceedings{Hartsell2019b, author = {Hartsell, Charles and Mahadevan, Nagabhushan and Ramakrishna, Shreyas and Dubey, Abhishek and Bapty, Theodore and Johnson, Taylor T. and Koutsoukos, Xenofon D. and Sztipanovits, Janos and Karsai, Gabor}, booktitle = {Proceedings of the 30th International Workshop on Rapid System Prototyping, {RSP} 2019, New York, NY, USA, October 17-18, 2019}, title = {{CPS} Design with Learning-Enabled Components: {A} Case Study}, year = {2019}, pages = {57--63}, bibsource = {dblp computer science bibliography, https://dblp.org}, biburl = {https://dblp.org/rec/bib/conf/rsp/HartsellMRDBJKS19}, category = {selectiveconference}, contribution = {colab}, doi = {10.1145/3339985.3358491}, file = {:Hartsell2019b-CPS_Design_with_Learning-Enabled_Components_A_Case_Study.pdf:PDF}, keywords = {assurance}, project = {cps-autonomy}, tag = {ai4cps}, timestamp = {Thu, 28 Nov 2019 12:43:50 +0100}, url = {https://doi.org/10.1145/3339985.3358491} }

Cyber-Physical Systems (CPS) are used in many applications where they must perform complex tasks with a high degree of autonomy in uncertain environments. Traditional design flows based on domain knowledge and analytical models are often impractical for tasks such as perception, planning in uncertain environments, control with ill-defined objectives, etc. Machine-learning-based techniques have demonstrated good performance for such difficult tasks, leading to the introduction of Learning-Enabled Components (LEC) in CPS. Model-based design techniques have been successful in the development of traditional CPS, and toolchains which apply these techniques to CPS with LECs are being actively developed. As LECs are critically dependent on training and data, one of the key challenges is to build design automation for them. In this paper, we examine the development of an autonomous Unmanned Underwater Vehicle (UUV) using the Assurance-based Learning-enabled Cyber-physical systems (ALC) Toolchain.
Each stage of the development cycle is described including architectural modeling, data collection, LEC training, LEC evaluation and verification, and system-level assurance.

- S. Pradhan, A. Dubey, S. Khare, S. Nannapaneni, A. Gokhale, S. Mahadevan, D. C. Schmidt, and M. Lehofer, CHARIOT: Goal-Driven Orchestration Middleware for Resilient IoT Systems, ACM Trans. Cyber-Phys. Syst., vol. 2, no. 3, June 2018.

@article{Pradhan2018, author = {Pradhan, Subhav and Dubey, Abhishek and Khare, Shweta and Nannapaneni, Saideep and Gokhale, Aniruddha and Mahadevan, Sankaran and Schmidt, Douglas C. and Lehofer, Martin}, journal = {ACM Trans. Cyber-Phys. Syst.}, title = {CHARIOT: Goal-Driven Orchestration Middleware for Resilient IoT Systems}, year = {2018}, issn = {2378-962X}, month = jun, number = {3}, volume = {2}, address = {New York, NY, USA}, articleno = {16}, contribution = {lead}, doi = {10.1145/3134844}, issue_date = {July 2018}, keywords = {resilience at the edge, orchestration middleware, cyber-physical systems, Autonomous management}, numpages = {37}, project = {cps-middleware,cps-reliability}, publisher = {Association for Computing Machinery}, tag = {ai4cps,platform}, url = {https://doi.org/10.1145/3134844} }

An emerging trend in Internet of Things (IoT) applications is to move the computation (cyber) closer to the source of the data (physical). This paradigm is often referred to as edge computing. If edge resources are pooled together, they can be used as decentralized shared resources for IoT applications, providing increased capacity to scale up computations and minimize end-to-end latency. Managing applications on these edge resources is hard, however, due to their remote, distributed, and (possibly) dynamic nature, which necessitates autonomous management mechanisms that facilitate application deployment, failure avoidance, failure management, and incremental updates. To address these needs, we present CHARIOT, which is orchestration middleware capable of autonomously managing IoT systems consisting of edge resources and applications. CHARIOT implements a three-layer architecture. The topmost layer comprises a system description language, the middle layer comprises a persistent data storage layer and the corresponding schema to store system information, and the bottom layer comprises a management engine that uses information stored persistently to formulate constraints that encode system properties and requirements, thereby enabling the use of satisfiability modulo theories (SMT) solvers to compute optimal system (re)configurations dynamically at runtime. This article describes the structure and functionality of CHARIOT and evaluates its efficacy as the basis for a smart parking system case study that uses sensors to manage parking spaces.
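CHARIOT's management engine encodes system properties as constraints and hands them to an SMT solver. The Python sketch below only illustrates the shape of that (re)configuration problem: an exhaustive search stands in for the solver, memory capacity is the only constraint, and minimizing the number of nodes used is an assumed objective. Component names, sizes, and the failure handling are hypothetical; a real deployment would use a solver rather than enumeration, which grows exponentially with the number of components.

```python
from itertools import product
from typing import Dict, FrozenSet, Optional


def find_configuration(components: Dict[str, int], nodes: Dict[str, int],
                       failed: FrozenSet[str] = frozenset()) -> Optional[Dict[str, str]]:
    """Assign each component to a live node without exceeding node capacity.

    components: {component: memory_mb}; nodes: {node: capacity_mb}.
    Returns the assignment using the fewest nodes, or None if infeasible.
    Exhaustive search stands in for an SMT solver; fine only for tiny systems.
    """
    live = [n for n in nodes if n not in failed]
    names = list(components)
    best = None
    for choice in product(live, repeat=len(names)):
        load = {n: 0 for n in live}
        for comp, node in zip(names, choice):
            load[node] += components[comp]
        if any(load[n] > nodes[n] for n in live):
            continue  # violates a capacity constraint
        used = len(set(choice))
        if best is None or used < best[0]:
            best = (used, dict(zip(names, choice)))
    return None if best is None else best[1]


components = {"sensor": 100, "analytics": 300}
nodes = {"edge1": 512, "edge2": 256}
initial = find_configuration(components, nodes)
# Reconfiguration after a failure: re-solve with the failed node excluded.
after_failure = find_configuration(components, nodes, failed=frozenset({"edge1"}))
```

Re-solving with the failed node excluded mirrors, in miniature, the runtime reconfiguration loop described above; here the remaining node is too small, so the sketch reports infeasibility.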

- M. García-Valls, A. Dubey, and V. J. Botti, Introducing the new paradigm of Social Dispersed Computing: Applications, Technologies and Challenges, Journal of Systems Architecture - Embedded Systems Design, vol. 91, pp. 83–102, 2018.

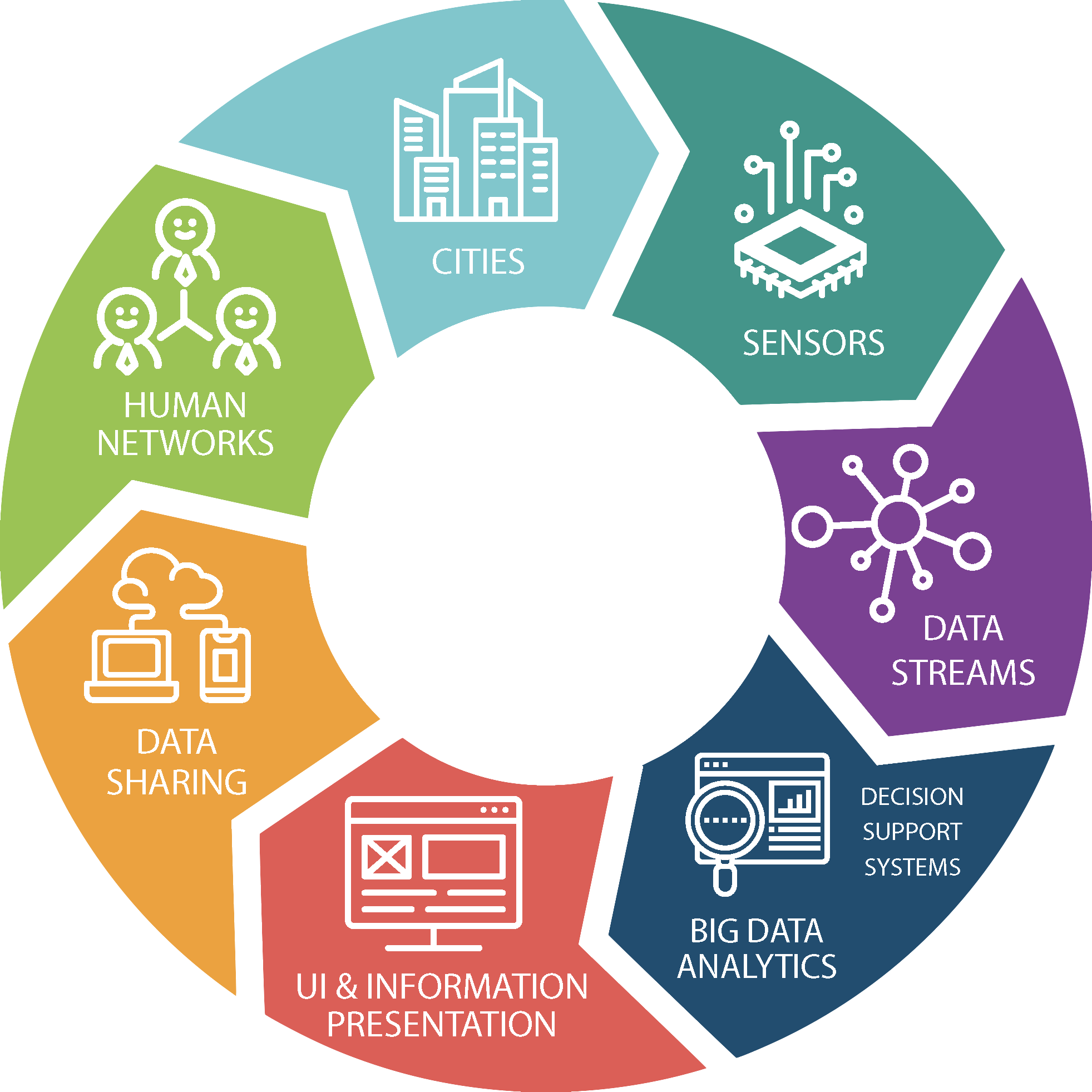

@article{GarciaValls2018, author = {Garc{\'{\i}}a{-}Valls, Marisol and Dubey, Abhishek and Botti, Vicent J.}, journal = {Journal of Systems Architecture - Embedded Systems Design}, title = {Introducing the new paradigm of Social Dispersed Computing: Applications, Technologies and Challenges}, year = {2018}, pages = {83--102}, volume = {91}, bibsource = {dblp computer science bibliography, https://dblp.org}, biburl = {https://dblp.org/rec/bib/journals/jsa/Garcia-VallsDB18}, contribution = {colab}, doi = {10.1016/j.sysarc.2018.05.007}, file = {:Garcia-Valls2018-Introducing_the_new_paradigm_of_Social_Dispersed_Computing_Applications_Technologies_and_Challenges.pdf:PDF}, keywords = {middleware}, project = {cps-middleware}, tag = {platform,decentralization}, timestamp = {Mon, 16 Sep 2019 01:00:00 +0200}, url = {https://doi.org/10.1016/j.sysarc.2018.05.007} }

If the last decade viewed computational services as a utility, then surely this decade has transformed computation into a commodity. Computation is now progressively integrated into physical networks in a seamless way that enables cyber-physical systems (CPS) and the Internet of Things (IoT) to meet their latency requirements. Similar to the concept of “platform as a service” or “software as a service”, both cloudlets and fog computing have found their own use cases. Edge devices (which we call end or user devices for disambiguation) play the role of personal computers, dedicated to a user and to a set of correlated applications. In this new scenario, the boundaries between the network node, the sensor, and the actuator are blurring, driven primarily by the computational power of IoT nodes such as single-board computers and smartphones. The larger volumes of data generated in this type of network need clever, scalable, and possibly decentralized computing solutions that can scale independently as required. Any node can be seen as part of a graph, with the capacity to serve as a computing node, a network router node, or both. Complex applications can possibly be distributed over this graph or network of nodes to improve overall performance, such as the amount of data processed over time. In this paper, we identify this new computing paradigm, which we call Social Dispersed Computing, and analyze its key themes, including a new outlook on its relation to agent-based applications. We architect this new paradigm by providing supportive application examples that include next-generation electrical energy distribution networks, next-generation mobility services for transportation, and applications for distributed analysis and identification of non-recurring traffic congestion in cities. The paper analyzes the existing computing paradigms (e.g., cloud, fog, edge, mobile edge, social, etc.), resolving the ambiguity of their definitions, and analyzes and discusses the relevant foundational software technologies, the remaining challenges, and research opportunities.

- D. Balasubramanian, A. Dubey, W. Otte, T. Levendovszky, A. S. Gokhale, P. S. Kumar, W. Emfinger, and G. Karsai, DREMS ML: A wide spectrum architecture design language for distributed computing platforms, Sci. Comput. Program., vol. 106, pp. 3–29, 2015.

@article{Balasubramanian2015, author = {Balasubramanian, Daniel and Dubey, Abhishek and Otte, William and Levendovszky, Tihamer and Gokhale, Aniruddha S. and Kumar, Pranav Srinivas and Emfinger, William and Karsai, Gabor}, journal = {Sci. Comput. Program.}, title = {{DREMS} {ML:} {A} wide spectrum architecture design language for distributed computing platforms}, year = {2015}, pages = {3--29}, volume = {106}, bibsource = {dblp computer science bibliography, https://dblp.org}, biburl = {https://dblp.org/rec/bib/journals/scp/Balasubramanian15}, contribution = {colab}, doi = {10.1016/j.scico.2015.04.002}, file = {:Balasubramanian2015-DREMS_ML_A_wide_spectrum_architecture_design_language_for_distributed_computing_platforms.pdf:PDF}, keywords = {middleware}, project = {cps-middleware}, tag = {platform}, timestamp = {Sat, 27 May 2017 01:00:00 +0200}, url = {https://doi.org/10.1016/j.scico.2015.04.002} }

Complex sensing, processing and control applications running on distributed platforms are difficult to design, develop, analyze, integrate, deploy and operate, especially if resource constraints, fault tolerance and security issues are to be addressed. While technology exists today for engineering distributed, real-time component-based applications, many problems remain unsolved by existing tools. Model-driven development techniques are powerful, but there are very few existing and complete tool chains that offer an end-to-end solution to developers, from design to deployment. There is a need for an integrated model-driven development environment that addresses all phases of application lifecycle including design, development, verification, analysis, integration, deployment, operation and maintenance, with supporting automation in every phase. Arguably, a centerpiece of such a model-driven environment is the modeling language.
To that end, this paper presents a wide-spectrum architecture design language called DREMS ML that itself is an integrated collection of individual domain-specific sub-languages. We claim that the language promotes “correct-by-construction” software development and integration by supporting each individual phase of the application lifecycle. Using a case study, we demonstrate how the design of DREMS ML impacts the development of embedded systems.

- T. Levendovszky, A. Dubey, W. Otte, D. Balasubramanian, A. Coglio, S. Nyako, W. Emfinger, P. S. Kumar, A. S. Gokhale, and G. Karsai, Distributed Real-Time Managed Systems: A Model-Driven Distributed Secure Information Architecture Platform for Managed Embedded Systems, IEEE Software, vol. 31, no. 2, pp. 62–69, 2014.

@article{Levendovszky2014, author = {Levendovszky, Tihamer and Dubey, Abhishek and Otte, William and Balasubramanian, Daniel and Coglio, Alessandro and Nyako, Sandor and Emfinger, William and Kumar, Pranav Srinivas and Gokhale, Aniruddha S. and Karsai, Gabor}, journal = {{IEEE} Software}, title = {Distributed Real-Time Managed Systems: {A} Model-Driven Distributed Secure Information Architecture Platform for Managed Embedded Systems}, year = {2014}, number = {2}, pages = {62--69}, volume = {31}, bibsource = {dblp computer science bibliography, https://dblp.org}, biburl = {https://dblp.org/rec/bib/journals/software/LevendovszkyDOBCNEKGK14}, contribution = {colab}, doi = {10.1109/MS.2013.143}, file = {:Levendovszky2014-Distributed_Real_Time_Managed_Systems.pdf:PDF}, keywords = {middleware}, project = {cps-middleware,cps-reliability}, tag = {platform}, timestamp = {Thu, 18 May 2017 01:00:00 +0200}, url = {https://doi.org/10.1109/MS.2013.143} }

Architecting software for a cloud computing platform built from mobile embedded devices incurs many challenges that aren’t present in traditional cloud computing. Both effectively managing constrained resources and isolating applications without adverse performance effects are needed. A practical design- and runtime solution incorporates modern software development practices and technologies along with novel approaches to address these challenges. The patterns and principles manifested in this system can potentially serve as guidelines for current and future practitioners in this field.

- N. Mahadevan, A. Dubey, and G. Karsai, Application of software health management techniques, in 2011 ICSE Symposium on Software Engineering for Adaptive and Self-Managing Systems, SEAMS 2011, Waikiki, Honolulu, HI, USA, May 23-24, 2011, 2011, pp. 1–10.

@inproceedings{Mahadevan2011, author = {Mahadevan, Nagabhushan and Dubey, Abhishek and Karsai, Gabor}, booktitle = {2011 {ICSE} Symposium on Software Engineering for Adaptive and Self-Managing Systems, {SEAMS} 2011, Waikiki, Honolulu, HI, USA, May 23-24, 2011}, title = {Application of software health management techniques}, year = {2011}, acceptance = {27}, pages = {1--10}, bibsource = {dblp computer science bibliography, https://dblp.org}, biburl = {https://dblp.org/rec/bib/conf/icse/MahadevanDK11}, category = {selectiveconference}, contribution = {colab}, doi = {10.1145/1988008.1988010}, file = {:Mahadevan2011-Application_of_software_health_management_techniques.pdf:PDF}, keywords = {performance, reliability}, project = {cps-middleware,cps-reliability}, tag = {platform}, timestamp = {Tue, 06 Nov 2018 00:00:00 +0100}, url = {https://doi.org/10.1145/1988008.1988010} }

The growing complexity of software used in large-scale, safety-critical cyber-physical systems makes it increasingly difficult to expose and hence correct all potential defects. There is a need to augment the existing fault tolerance methodologies with new approaches that address latent software defects exposed at runtime. This paper describes an approach that borrows and adapts traditional ‘System Health Management’ techniques to improve software dependability through simple formal specification of runtime monitoring, diagnosis, and mitigation strategies. The two-level approach to health management at the component and system level is demonstrated on a simulated case study of an Air Data Inertial Reference Unit (ADIRU). An ADIRU was categorized as the primary failure source for the in-flight upset of Malaysia Airlines Flight 124 over Perth, Australia, in 2005.

- N. Roy, A. Dubey, and A. S. Gokhale, Efficient Autoscaling in the Cloud Using Predictive Models for Workload Forecasting, in IEEE International Conference on Cloud Computing, CLOUD 2011, Washington, DC, USA, 4-9 July, 2011, 2011, pp. 500–507.

@inproceedings{Roy2011a, author = {Roy, Nilabja and Dubey, Abhishek and Gokhale, Aniruddha S.}, booktitle = {{IEEE} International Conference on Cloud Computing, {CLOUD} 2011, Washington, DC, USA, 4-9 July, 2011}, title = {Efficient Autoscaling in the Cloud Using Predictive Models for Workload Forecasting}, year = {2011}, acceptance = {22.4}, pages = {500--507}, bibsource = {dblp computer science bibliography, https://dblp.org}, biburl = {https://dblp.org/rec/bib/conf/IEEEcloud/RoyDG11}, category = {selectiveconference}, contribution = {colab}, doi = {10.1109/CLOUD.2011.42}, file = {:Roy2011a-Efficient_Autoscaling_in_the_Cloud_Using_Predictive_Models_for_Workload_Forecasting.pdf:PDF}, keywords = {performance}, project = {cps-middleware}, tag = {platform}, timestamp = {Wed, 16 Oct 2019 14:14:54 +0200}, url = {https://doi.org/10.1109/CLOUD.2011.42} }

Large-scale component-based enterprise applications that leverage Cloud resources expect Quality of Service (QoS) guarantees in accordance with service level agreements between the customer and service providers. In the context of Cloud computing, auto scaling mechanisms hold the promise of assuring QoS properties to the applications while simultaneously making efficient use of resources and keeping operational costs low for the service providers. Despite the perceived advantages of auto scaling, realizing its full potential is hard due to multiple challenges stemming from the need to precisely estimate resource usage in the face of significant variability in client workload patterns. This paper makes three contributions to overcome the general lack of effective techniques for workload forecasting and optimal resource allocation. First, it discusses the challenges involved in auto scaling in the cloud. Second, it develops a model-predictive algorithm for workload forecasting that is used for resource auto scaling. Finally, empirical results are provided that demonstrate that resources can be allocated and deallocated by our algorithm in a way that satisfies the application QoS requirements while keeping operational costs low.
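Forecast-driven autoscaling can be made concrete with a small example. The sketch below fits a second-order autoregressive model to recent request rates by least squares and converts the one-step forecast into a VM count. It is a minimal illustration under our own assumptions and does not reproduce the algorithm from the paper. The AR(2) form without an intercept, the fixed per-VM capacity, the headroom factor, and the helper names (`fit_ar2`, `forecast_next`, `plan_vms`) are all hypothetical.

```python
import math


def fit_ar2(history):
    """Least-squares fit of y[t] = a*y[t-1] + b*y[t-2] (no intercept, for brevity)."""
    s11 = s12 = s22 = r1 = r2 = 0.0
    for t in range(2, len(history)):
        x1, x2, y = history[t - 1], history[t - 2], history[t]
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        r1 += x1 * y
        r2 += x2 * y
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-9 * max(1.0, s11 * s22):
        # Degenerate history (e.g. a flat workload): predict "next = last".
        return 1.0, 0.0
    # Solve the 2x2 normal equations with Cramer's rule.
    a = (r1 * s22 - r2 * s12) / det
    b = (s11 * r2 - s12 * r1) / det
    return a, b


def forecast_next(history):
    """One-step-ahead workload forecast (hypothetical helper)."""
    if len(history) < 3:
        return float(history[-1])
    a, b = fit_ar2(history)
    # Workload cannot be negative, so clamp the extrapolation at zero.
    return max(0.0, a * history[-1] + b * history[-2])


def plan_vms(history, capacity_per_vm, headroom=0.1, min_vms=1):
    """Return (forecast, number of VMs) sized for the forecast plus headroom."""
    forecast = forecast_next(history)
    needed = forecast * (1.0 + headroom) / capacity_per_vm
    return forecast, max(min_vms, math.ceil(needed))
```

For a steadily growing workload such as 100, 110, 120, 130, 140 requests/s, the fitted coefficients are 2 and -1 (linear extrapolation). The forecast is therefore 150, and at 40 requests/s per VM with 10% headroom, five VMs are provisioned. A real deployment would also account for VM start-up delay and the cost of scaling in and out, which this sketch ignores.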

About us

The ScopeLab Research Group at the Institute for Software Integrated Systems (ISIS), Vanderbilt University conducts research across cyber-physical systems (CPS), with a primary focus on transportation and smart grids. We explore fundamental challenges in decision-making, including scalability, non-stationarity, and neuro-symbolic computing, to develop intelligent, adaptive, and secure CPS. In transportation, our work spans public transit optimization, multi-modal transit system design, and emergency response and incident detection. In smart grids, we focus on anomaly detection and fault isolation, electric vehicle and grid integration, and transactive energy systems. Beyond these domains, we strive to identify core decision-making challenges that impact CPS more broadly. By integrating computational intelligence with real-world applications, we tackle critical challenges in designing resilient, efficient, and secure systems for smart and connected environments. Visit SmartTransit.ai to learn more about our transit projects and StatResp.ai for our emergency response initiatives. Our work has been sponsored in part by NSF, NASA, DOE, ARPA-E, AFRL, DARPA, TNECD, Nissan, Siemens, Cisco, and IBM over the years. The lab is led by Prof. Abhishek Dubey. Our publications can be found on the publications page.

Recent Updates

- December 2025. Rishav’s paper CONSENT: A Negotiation Framework for Leveraging User Flexibility in Vehicle-to-Building Charging under Uncertainty has been accepted at the 25th International Conference on Autonomous Agents and Multi-Agent Systems.

- September 2025. Yunuo’s paper ESCORT: Efficient Stein-variational and Sliced Consistency-Optimized Temporal Belief Representation for POMDPs has been accepted at the 39th Conference on Neural Information Processing Systems (NeurIPS’25).

- September 2025. Nathaniel’s paper NS-Gym: Open-Source Simulation Environments and Benchmarks for Non-Stationary Markov Decision Processes has been accepted at the 39th Conference on Neural Information Processing Systems (NeurIPS’25).

- May 2025. Ammar’s paper TRACE: Traffic Response Anomaly Capture Engine for Localization of Traffic Incidents has been nominated as a best paper finalist at the 2025 IEEE International Conference on Smart Computing (SMARTCOMP).

- May 2025. Fangqi’s paper Reinforcement Learning-based Approach for Vehicle-to-Building Charging with Heterogeneous Agents and Long Term Rewards has been nominated as a best paper finalist at the 24th International Conference on Autonomous Agents and Multi-Agent Systems.

- May 2024. JP’s paper An Online Approach to Solving Public Transit Stationing and Dispatch Problem has been presented with the best paper award at the 15th ACM/IEEE International Conference on Cyber-Physical Systems.

Research Verticals

Smart Incident Response

Planning and preparation in anticipation of urban emergency incidents are critical because of the alarming extent of the damage such incidents cause, as well as the sheer frequency of their occurrence. Incident response is typically optimized as part of designing emergency response system (ERS) pipelines, but response times can vary widely depending on the location of the first responders, other incidents in the call chain, the severity of the call, traffic conditions, and weather conditions. Over the past six years, our research on algorithmic approaches to ERS has developed proactive stationing and principled dispatch strategies that reduce overall response times. The system depends on incident data, temporal data (weather and traffic), and static roadway data, and it has to contend with changes in incident distributions and with communication failures.

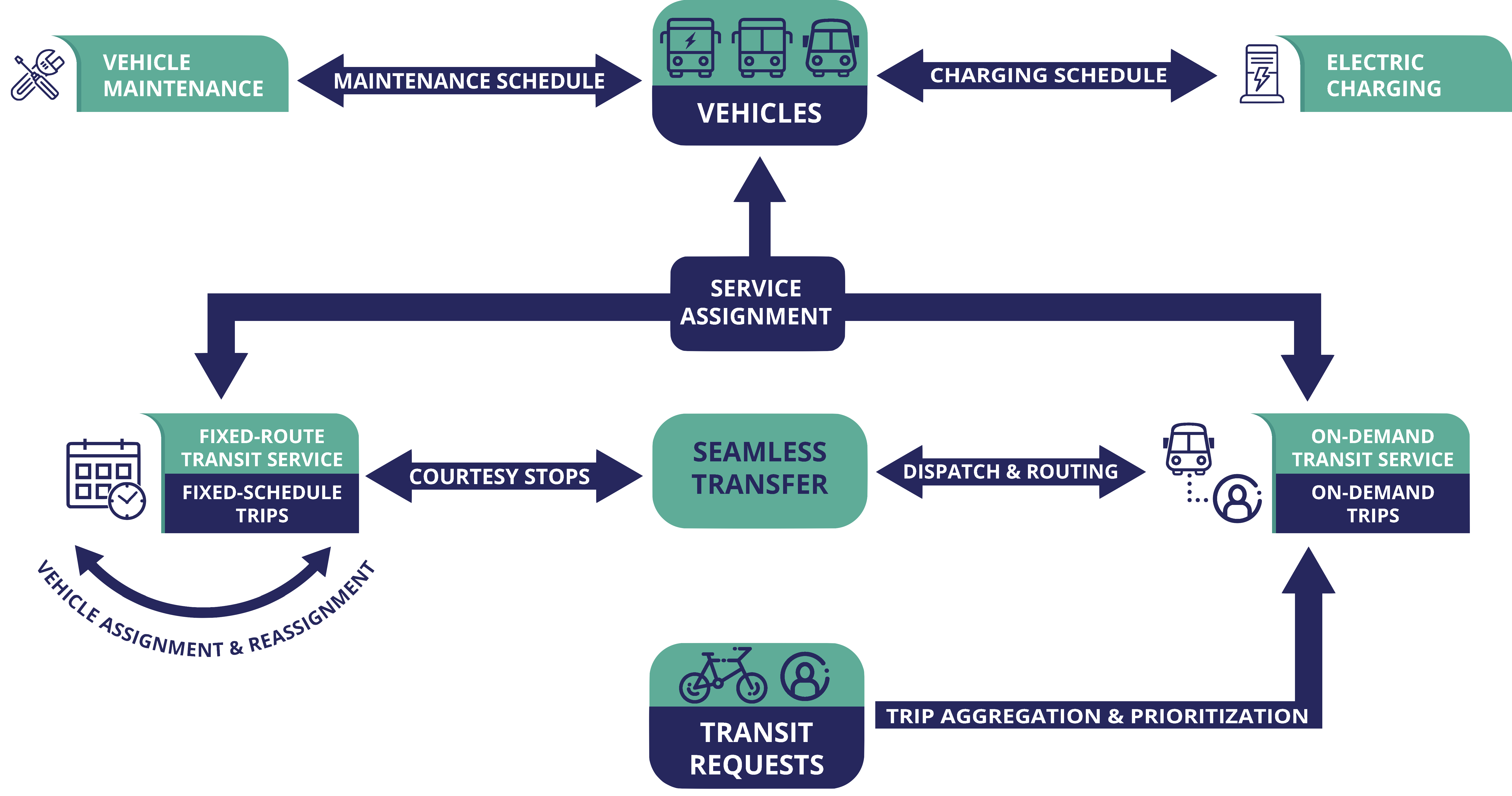

Smart Mobility

Transit agencies struggle to maintain transit accessibility with reduced resources, changing ridership patterns, and vehicle capacity constraints. For the last several years, we have been designing AI-based scheduling systems that allocate vehicles and drivers to transit services; schedule vehicle maintenance and electric-vehicle charging; proactively station and dispatch vehicles for fixed-line service to mitigate unscheduled maintenance and unmet transit demand; aggregate on-demand transit requests; and dispatch and route on-demand vehicles. As in incident response, these decision support systems face key challenges. Environments are non-stationary and difficult to predict, because human factors and complex processes affect transit demand and traffic, as do unscheduled maintenance and accidents. Simulations are also expensive and complex, since city-scale simulations must consider millions of individuals and vehicles.

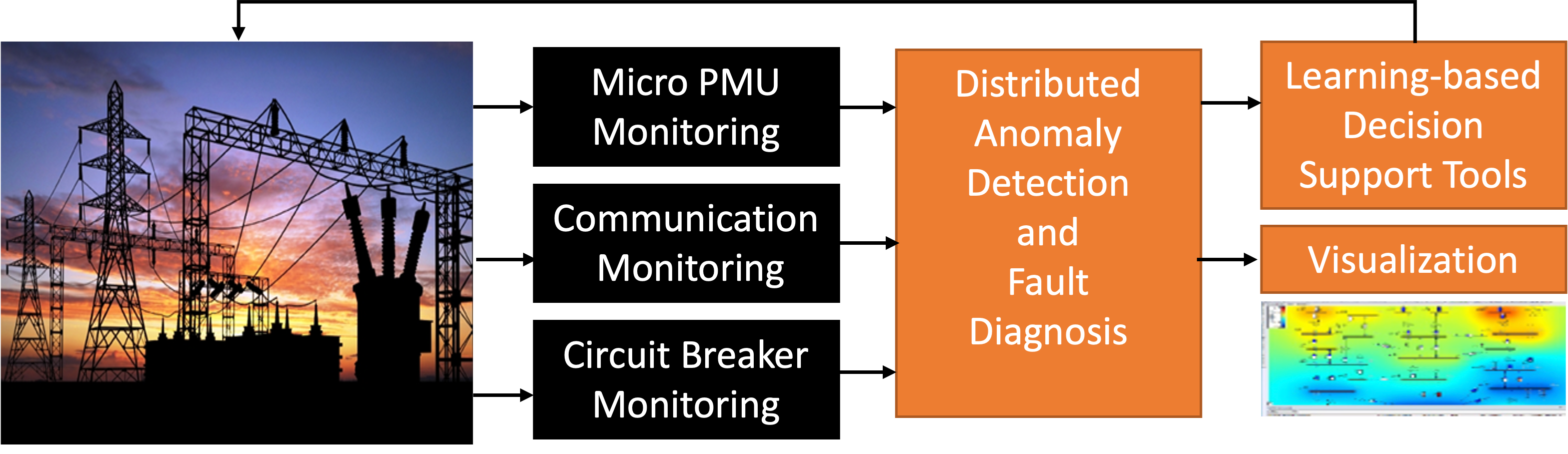

Smart Power

Resilience, scalability, and safety of operations are critical for the power grid. Ensuring that the system operates resiliently while handling component failures, environmental uncertainty, and adversarial attacks is not easy. Challenges in this domain include data corruption, failure and misoperation of protection equipment, and the possibility that an AI system misclassifies a fault. System integration and operation of the grid remain open challenges. Further, an emerging trend in this domain is networked microgrids that can island or connect to one another to respond to adversities. However, dynamic formation of networked microgrids from heterogeneous components is not a solved problem. System integrators must often put together a microgrid from available components that communicate different information, at different rates, using different protocols. Due to variations in microgrid architectures and their generation and load mix, each microgrid solution is customized and site-specific.

Research Horizontals

AI for Cyber-Physical Systems

The motivation for this research area is the use of Artificial Intelligence (AI) in cyber-physical systems (CPS). AI methods can handle high-dimensional state spaces and learn decision procedures and control algorithms from data rather than from models. This matters because real-world state spaces and environments are often complex, dynamic, and hard to model. Despite their impressive capability, using these methods in CPS is challenging for two reasons: (1) the data and operating assumptions made during system design are likely incomplete, so the system may fail at runtime; and (2) developing safety and assurance cases for systems that use these components is complicated, because traditional design methods focus only on training, testing, and deploying individual components and not on integrated, system-level assurance. We co-design system architectures as well as novel state estimators, predictors, and decision procedures for the research verticals we investigate: proactive emergency response systems, transit management systems, and electric power grids. As we develop these systems and methods, we consider the societal context in which they are used and investigate principles that allow us to reason about resilience, assurance, and fault diagnostics for AI-CPS.

Resilient Design and Operation of Complex Cyber-Physical Systems

Cyber-physical systems encompass a wide range of modern engineered systems, including smart transit, smart emergency response, and the smart grid. A central challenge is constructing and operating these systems safely and efficiently. The research community has taken many different approaches to this problem over the years; this lab has focused on component-based software engineering (CBSE) for these systems. The guiding principles of CBSE are interfaces with well-defined execution models, compositional semantics, and analysis. However, several challenges remain to be resolved: (a) performance management; (b) modularization and adaptation of the design as the requirements and environment change; (c) safe and secure design of the system itself, ensuring that new designs and component additions can be compositionally analyzed and operated throughout the life cycle of the system; (d) fault diagnostics and failure isolation to detect and triage problems online; and (e) reconfiguration and recovery to dynamically adapt to failures and environmental changes and ensure the safe completion of mission tasks.

Decentralized Operations for Cyber-Physical Systems

A fundamental focus of research in our lab is the design and development of algorithms and frameworks that enable decentralized operations in large-scale cyber-physical systems. Many of these systems require multiple entities and stakeholders to participate, and such systems are vulnerable to the data and operational disruptions that are common in centralized architectures. These challenges have led to an increasing focus on smart and connected community (SCC) platforms that not only let participants exchange data and services in a decentralized and possibly anonymous manner, but also preserve an immutable and auditable record of all transactions in the system. Such transactive platforms are actively being proposed for use in healthcare, smart energy systems, and smart transportation systems. These platforms can support privacy-preserving and anonymizing techniques, such as differential privacy, fully homomorphic encryption, and mixing. Further, the immutable nature of records and event chronology in these platforms provides high rigor and auditability. Lastly, the decentralized nature of these platforms ensures that an adversary must compromise a large number of nodes to take control of the system.

Active Projects

Robust Online Decision Procedures for Societal Scale CPS

This CAREER grant studies novel methods for designing sequential, non-myopic, online decision procedures for societal-scale cyber-physical systems such as public transit, emergency response systems, and power grids, which form the critical infrastructure of our communities. Online optimization of these systems entails taking actions that consider the tightly integrated spatial, temporal, and human dimensions while accounting for uncertainty caused by changes in the system and the environment. For example, emergency response management (ERM) operators must optimally dispatch ambulances and help trucks to respond to incidents while accounting for changes in traffic patterns and road closures. Similarly, public transportation agencies operating electric vehicles must manage and schedule the vehicles according to expected travel demand while choosing charging schedules that account for the overall grid load. The project’s proposed approach focuses on designing a modular and reusable online decision-making pipeline that combines the advantages of online planning methods, such as Monte-Carlo Tree Search, with offline policy learning methods, such as reinforcement learning, promising faster convergence and robustness to changes in the environment. The research activities are complemented by educational activities focused on designing cloud-based teaching environments that can help students and operators with prerequisite domain and statistical knowledge to design, manage, and experiment with decision procedures.

Optimizing Fixed Line and On-demand Services for Public Transportation

We are developing algorithms for system-wide optimization of microtransit, fixed-line, and paratransit services, focusing on three objectives: minimizing energy per passenger per mile, minimizing total energy consumed, and maximizing the percentage of daily trips served by public transit. While these decisions can be optimized separately, as prior work has done, integrated optimization can lead to significantly better service (e.g., synchronizing flexible courtesy stops with microtransit dispatch for easy transfers). However, integrated optimization is hard because of uncertainty in future demand, traffic conditions, and other factors. We address these challenges using state-of-the-art artificial intelligence, machine learning, and data-driven optimization techniques. Deep reinforcement learning (DRL) and Monte-Carlo tree search form the core of our operational optimization, which is supported by data-driven optimization for offline planning and by machine learning techniques for predicting demand, maintenance requirements, and traffic conditions. A key aspect of this research area is the development of techniques to preserve privacy across multimodal datasets while still providing sufficient information for analysis and scheduling. The outcome of the project will be a deployment-ready software system that can be used to design and operate a microtransit service effectively. Collaborators include Nashville WeGo, the Chattanooga Area Regional Transportation Authority, and a multi-university team that includes Penn State, Cornell, the University of Tennessee at Chattanooga, and the University of Washington.

Optimizing Vehicle Charging and Discharging for Reducing Building Grid Dependency

Our team is working with Nissan on designing online decision policies that optimize the charging and discharging rates of electric vehicles connected to buildings, with the goal of reducing the overall cost of electricity purchased from the main power grid. Once complete, the system will respond to dynamic changes in utility pricing signals and will ensure that every vehicle in the deployment experiments reaches the charge level it needs to complete its trips.

Mechanisms for Outsourcing Computation to Edge Resources

Developing an edge cloud is a major concern for cyber-physical systems because latency to a remote cloud can be prohibitive. Under DOE and ARPA-E funding, we have been developing RIAPS, a middleware that supports edge computing. It builds on much of our prior work on componentized software frameworks for real-time systems and provides solutions for security, fault isolation, fault recovery, device abstractions, time synchronization, and correct-by-construction design. Recently, we have been extending this work to create MODICUM, a computational outsourcing market for edge systems. MODICUM is a decentralized system for outsourcing computation. It deters participants from misbehaving, a key problem in decentralized systems, by resolving disputes via dedicated mediators and by imposing enforceable fines. Unlike other decentralized outsourcing solutions, MODICUM minimizes computational overhead because it does not require global trust in mediation results.

Generative Anomaly Detection and Prognostics

Anomaly detection, prognostics, and automated mitigation are critical for data center management. Most approaches fall into two categories: model-based and data-driven. Model-based techniques rely on physics-guided models that can explain and predict the expected progression of parameters such as temperature and voltage in electronics, whereas data-driven approaches suit complex scenarios where no suitable physics-based model is available. Data-driven approaches can be further divided into supervised and unsupervised methods. Supervised methods rely on prior labels, while unsupervised methods are better suited to cases where prior failure data is unavailable or failure trends change over time. This is precisely the situation in modern data centers, where network devices and models change over time and prior labels are absent. The problem, therefore, is to develop anomaly scoring functions that can identify whether the various components in a complex device are failing. Unlike prior art, it is crucial not only to identify anomalies but also to identify which sub-components at the silicon level are failing.
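Per-sub-component scoring without labels can be illustrated with a simple example. The sketch below scores each sub-component's latest reading with a robust z-score (the median and median absolute deviation of that sub-component's own recent history) and flags the ones above a threshold. It is a deliberately simple stand-in for illustration, not our generative approach. The telemetry layout, the sub-component names, and the 3.5 cutoff (a common rule of thumb for robust z-scores) are assumptions.

```python
import statistics


def robust_z(history, value):
    """Robust z-score of `value` against a sub-component's own recent history."""
    med = statistics.median(history)
    mad = statistics.median(abs(x - med) for x in history)
    if mad == 0:
        # Perfectly flat history: any deviation at all is treated as anomalous.
        return 0.0 if value == med else float("inf")
    # 1.4826 rescales the MAD so it matches the standard deviation for normal data.
    return abs(value - med) / (1.4826 * mad)


def flag_subcomponents(telemetry, latest, threshold=3.5):
    """Score every sub-component and return (scores, sorted names above threshold).

    telemetry: hypothetical mapping of sub-component name -> recent readings.
    latest:    mapping of sub-component name -> newest reading to score.
    """
    scores = {name: robust_z(hist, latest[name]) for name, hist in telemetry.items()}
    flagged = sorted(name for name, s in scores.items() if s > threshold)
    return scores, flagged


telemetry = {
    "asic_temp": [60, 61, 59, 60, 62, 61],
    "fan_rpm": [3000, 3010, 2990, 3005, 2995, 3000],
}
scores, flagged = flag_subcomponents(telemetry, {"asic_temp": 75, "fan_rpm": 3002})
```

Using the median and MAD instead of the mean and standard deviation keeps a few past spikes in the history from inflating the baseline. Because each sub-component is scored separately, the flagged names point directly at the failing part rather than only at the device.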

Microgrid Control-Coordination Co-Design (MicroC3)

Microgrids (MG) deliver highly resilient power supply to local loads in the event of a power outage, while improving distribution system reliability by reducing the load on the system under stress conditions. Today’s microgrids are typically one-off configurations that are rarely optimized for the specific microgrid architecture, equipment, or physical and economic environment. They are often proprietary, closed systems, which leads to vendor lock-in and brittle, unmodifiable implementations. The goal of this project is to develop and demonstrate a Microgrid Control/Coordination Co-design (MicroC3) toolsuite that systematically designs an optimized microgrid, given a set of design objectives and performance constraints. The MicroC3 toolsuite will consist of two design-time tools (co-design optimization and validation) and a run-time tool. The co-design optimization tool identifies a selection of low-cost, right-sized equipment (i.e., the plant) and optimized local and system-level controls that meet design constraints and objectives. The validation tool verifies the design tool outputs in high-fidelity simulations and generates the implementation, including code and configurations for control, communications, and coordination. The run-time tool is a library of microgrid control algorithms running on an ARPA-E-funded open-source platform. It is automatically customized by the design and validation tools and deployed on low-cost but robust and cyber-secure hardware devices. The impact of the project is (1) a framework for identifying lowest-cost, non-trivial MG design variations that deliver predictable and superior performance; and (2) drastically simplified deployment of microgrids at significantly lower cost through automated implementation of a validated control and communication architecture into the operational environment. Investigators: Prof. Gabor Karsai (Co-PI), Prof. Abhishek Dubey (Co-PI), and Prof. Srdjan Lukic (PI).

Integrated Microgrid Control Platform

Dynamic formation of networked microgrids from heterogeneous components is not a solved problem. System integrators must often put together a microgrid from available components that communicate different information, at different rates, using different protocols. Due to variations in microgrid architectures and their generation and load mix, each microgrid solution is customized and site-specific. Building on the Resilient Information Architecture Platform for the Smart Grid, the goal of this project is to demonstrate a technology for microgrid integration and control based on distributed computing techniques, advanced software engineering methods, and state-of-the-art control algorithms. The technology provides a scalable and reusable solution in the form of a highly configurable Integrated Microgrid Control Platform (IMCP). Our solution addresses the heterogeneity problem by encapsulating protocol-specific details in reusable device software components with common interfaces. It addresses dynamic grid management and reconfiguration with advanced distributed algorithms that form the foundation for a decentralized and expandable microgrid controller. Investigators: Prof. Gabor Karsai (PI), Prof. Abhishek Dubey (Co-PI), and Prof. Srdjan Lukic (Co-PI).

AI-Engine for Adaptive Sensor Fusion For Traffic Monitoring Systems

This project will develop a new learning-based AI engine that can be integrated and deployed as an edge computing solution to interface with both integrated and non-intrusive sensors (cameras and lidars) deployed in a region. The AI engine will dynamically calibrate the sensor fusion and incident detection algorithms to provide a continuous stream of traffic analytics: vehicle classification, vehicle counts, vehicle density estimation, early incident detection, and incident clearance times. The goals of this project are threefold. First, we want to develop a technique that can provide sensor fusion at scale and identify outliers. Second, we want to show that the system can dynamically adapt and reconfigure to changing situations across space and time on the highways. Information about the potential sensitivity of traffic operations in each region can be derived from this team's prior work on incident-predictive CRASH analytics. Third, we want to design low-cost hardware that can be used to deploy these sensor algorithms at scale across the region. This hardware should also be able to integrate upcoming low-cost lidars and low-resolution cameras within a box that combines single-board computers, power supplies, weather-proof enclosures, and graphics processing units.

Augmenting and Advancing Cognitive Performance of Control Room Operators for Power Grid Resiliency

The goal of the project is to investigate the mechanisms required to integrate recent advances from cognitive neuroscience, artificial intelligence, machine learning, data science, cybersecurity, and power engineering to augment power grid operators for better performance. In the dynamic attentional control (DAC) framework, two key parameters influence human performance: working memory (WM) capacity, the ability to maintain information in the focus of attention; and cognitive flexibility (CF), the ability to use feedback to redirect decision making in fast-changing system scenarios. We are building a new set of algorithms for data-driven event detection, anomaly flag processing, root cause analysis, and decision support. These algorithms use Tree-Augmented Naive Bayesian Network (TAN) structures, a Minimum Weighted Spanning Tree (MWST) built with the Mutual Information (MI) metric, and unsupervised learning adapted for online learning and decision making.
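The classic Chow-Liu construction, which TAN builds on, illustrates the MWST step. It estimates pairwise mutual information from discrete observations and keeps the spanning tree with the largest total MI. The sketch below runs Kruskal's minimum spanning tree algorithm on negated MI, which is equivalent to finding that maximum-MI tree. It omits TAN's conditioning on the class variable and is an illustration, not our production code. The variable names and data are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def mutual_information(xs, ys):
    """Empirical mutual information (in nats) between two discrete sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    # Sum over (x, y) of p(x,y) * log(p(x,y) / (p(x) * p(y))), written with counts.
    return sum((c / n) * math.log(c * n / (px[x] * py[y])) for (x, y), c in joint.items())


def mi_spanning_tree(data):
    """Chow-Liu style tree: Kruskal's MST on -MI, i.e. the maximum-MI spanning tree.

    data: hypothetical mapping of variable name -> list of discrete observations.
    Returns a list of (a, b, mi) edges.
    """
    names = sorted(data)
    edges = sorted(
        (-mutual_information(data[a], data[b]), a, b) for a, b in combinations(names, 2)
    )
    parent = {v: v for v in names}  # union-find forest

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    tree = []
    for neg_mi, a, b in edges:
        ra, rb = find(a), find(b)
        if ra != rb:  # the edge joins two components, so it cannot create a cycle
            parent[ra] = rb
            tree.append((a, b, -neg_mi))
    return tree
```

Negating the weights lets a standard minimum spanning tree routine return the tree that keeps the most statistical dependence between neighboring variables. This is why a "minimum weighted" spanning tree and a maximum-MI tree describe the same structure here.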

Data Science and STEM

This project aims to help incorporate data science concepts and skill development into undergraduate courses in biology, computer science, engineering, and environmental science. Through a collaboration between Virginia Tech, Vanderbilt University, and North Carolina Agricultural and Technical State University, we are developing interdisciplinary learning modules based on high-frequency, real-time data from water and traffic monitoring systems. These topics will facilitate the incorporation of real-world data sets to enhance the student learning experience, and they are broad enough to incorporate other data sets in the future. Such expertise will better prepare students to enter the STEM workforce, especially STEM professions that focus on smart and connected computing. The project will investigate how and in what ways the modules support student learning of data science.

Past Projects

Addressing Transit Accessibility and Public Health Challenges due to COVID-19

The COVID-19 pandemic has not only disrupted the lives of millions but also created exigent operational and scheduling challenges for public transit agencies. Agencies are struggling to maintain transit accessibility with reduced resources, changing ridership patterns, vehicle capacity constraints due to social distancing, and reduced services due to driver unavailability. A number of transit agencies have also begun to help the local food banks deliver food to shelters, which further strains the available resources if not planned optimally. At the same time, the lack of situational information is creating a challenge for riders who need to understand what seating is available on the vehicles to ensure sufficient distancing. We are designing integrated transit operational optimization algorithms, which will provide proactive scheduling and allocation of vehicles to transit and cargo trips, considering exigent vehicle maintenance requirements (i.e., disinfection). A key component of the research is the design of privacy-preserving camera-based ridership detection methods that can help provide commuters with real-time information on available seats considering social-distancing constraints.

CHARIOT

The CHARIOT (Cyber-pHysical Application aRchItecture with Objective-based reconfiguraTion) project aims to address the challenges that arise in extensible CPS found in smart cities. CHARIOT is an application architecture that enables design, analysis, deployment, and maintenance of extensible CPS by using a novel design-time modeling tool and run-time computation infrastructure. In addition to physical properties, timing properties, and resource requirements, CHARIOT also considers the heterogeneity and resilience of these systems. The CHARIOT design environment follows a modular objective decomposition approach for developing and managing the system. Each objective is mapped to one or more data workflows implemented by different software components. This function-to-component association enables us to assess the impact of individual failures on the system objectives. The runtime architecture of CHARIOT provides a universal cyber-physical component model that allows distributed CPS applications to be constructed from software components and hardware devices without being tied to any specific platform or middleware. It extends the principles of health management, software fault tolerance, and goal-based design.

Energy Efficiency of Transit Operations

We are developing models to analyze and optimize the cost of transit operations by focusing on the energy impact of the vehicles. For this purpose, we are building real-time datasets that contain engine telemetry from all vehicles (including engine speed, GPS position, fuel usage, and state of charge for electric vehicles), together with traffic congestion, current events in the city, and braking and acceleration patterns. These high-dimensional datasets allow us to train accurate data-driven predictors of energy consumption for various routes and schedules using deep neural networks. Combined with traffic congestion information obtained from external sources, these predictors will enable agencies to identify and mitigate energy efficiency bottlenecks within each specific mode of operation, such as electric buses and electric cars. To make this possible, the project also developed new distributed computing and machine learning algorithms that can handle data at this rate and scale.

MIDAS

The goal of the project is to develop a new approach to evolutionary software development and deployment that extends the results of model-based software engineering and provides an integrated, end-to-end framework for building software that is focused on growth and adaptation. The envisioned technology is based on the concept of a ‘Model Design Language’ (MDL) that supports the expression of the developer’s objectives (the ‘what’), intentions (the ‘how’), and constraints (the ‘limitations’) related to the software artifacts to be produced. The ‘models’ represented in this language are called the ‘design models’ for the software artifact(s), and they encompass more than what we express today in software models. We consider software development a continuous process, as in the DevOps paradigm, where the software undergoes continuous change, improvement, and extension, and our goal is to build the tools to support this. The main idea is that changes in the requirements will lead the designer/developer to change the ‘design model’, which will in turn change the generated artifacts, or the target system at run-time, as needed.

Resilient Information Architecture Platform for the Smart Grid

The future of the Smart Grid for electrical power depends on computer software that is robust, reliable, effective, and secure. This software will continuously grow and evolve while operating and controlling a complex physical system on which modern life and the economy depend. The project aims at engineering and constructing the foundation for such software: a ‘platform’ that provides core services for building effective and powerful apps, not unlike apps on smartphones. The platform is designed using and advancing state-of-the-art results from electrical, computer, and software engineering, will be documented as an open standard, and will be prototyped as an open-source implementation.

Secure and Trustworthy Middleware for Integrated Energy and Mobility in Smart Connected Communities

The rapid evolution of data-driven analytics, the Internet of Things (IoT), and cyber-physical systems (CPS) is fueling a growing set of Smart and Connected Communities (SCC) applications, including smart transportation and smart energy. However, deploying such technological solutions without proper security mechanisms makes them susceptible to data integrity and privacy attacks, as observed in a large number of recent incidents. The goal of this project is to develop a framework to ensure data privacy, data integrity, and trustworthiness in smart and connected communities. A distinctive feature of the project is the collaboration between teams of researchers from the US and Japan. As part of the project, the research team is developing privacy-preserving algorithms and models for anomaly detection and for the trust and reputation scoring that application providers use for data integrity and information assurance. Toward that goal, we are also studying trade-offs between security, privacy, trust levels, resources, and performance using two exemplar applications: smart mobility and smart energy exchange in communities.

Statistical Optimization and Analytics for Community Emergency Management

The goal of this project is to improve emergency response systems through proactive resource management that minimizes response time and maximizes the effectiveness of the response. With road accidents accounting for 1.25 million deaths globally and 240 million emergency medical services (EMS) calls in the U.S. each year, there is a critical need for a proactive and effective response to these emergencies. Furthermore, a timely response is crucial and life-saving for severe incidents. Managing emergencies requires full integration of planning and response data and models, implemented in a dynamic and uncertain environment, to support real-time decisions about dispatching emergency response resources. However, state-of-the-art research has mainly focused on advances that target individual aspects of emergency response (e.g., prediction, optimization), even though the components of an Emergency Response Management (ERM) system are highly interconnected. Additionally, current ERM practice in the U.S. is reactive, resulting in a large variance in response times.

Transactive Energy Systems

Transactive energy systems have emerged as a transformative solution for the problems faced by distribution system operators due to the increasing use of distributed energy resources and rapid growth in renewable energy generation. In the last five years, we have used this research vertical to drive our research on resilient decentralized CPS and have developed a novel middleware platform called TRANSAX. TRANSAX enables participants to trade in an energy futures market, which improves efficiency by finding feasible matches for energy trades and reduces the load on the distribution system operator. It provides privacy to participants by anonymizing their trading activity using a distributed mixing service, while also enforcing constraints that limit trading activity based on safety requirements, such as keeping power flow below line capacity.
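The following sketch gives one simplified picture of what a line-capacity constraint on trades can look like. It models a radial feeder as a tree, routes each proposed trade along the path between seller and buyer, and greedily accepts only trades that keep every line's net flow within its capacity. It rests on our own assumptions (lossless lines, a tree topology, flows that simply add up, and hypothetical bus names and capacities) and is not the constraint formulation TRANSAX actually uses.

```python
def path_to_root(bus, parent):
    """List of buses from `bus` up to the feeder root (whose parent is None)."""
    path = [bus]
    while parent[bus] is not None:
        bus = parent[bus]
        path.append(bus)
    return path


def trade_flows(seller, buyer, energy, parent):
    """Signed flow each line carries for one trade.

    Lines are keyed by their child bus; positive means toward the root.
    """
    up = path_to_root(seller, parent)
    down = path_to_root(buyer, parent)
    common = set(up) & set(down)
    flows = {}
    # Seller side: energy moves up until it reaches the lowest common ancestor.
    for bus in up:
        if bus in common:
            break
        flows[bus] = flows.get(bus, 0.0) + energy
    # Buyer side: energy moves down from the common ancestor to the buyer.
    for bus in down:
        if bus in common:
            break
        flows[bus] = flows.get(bus, 0.0) - energy
    return flows


def clear_trades(trades, parent, capacity):
    """Greedily accept trades that keep every line within its capacity."""
    line_flow = {bus: 0.0 for bus, p in parent.items() if p is not None}
    accepted = []
    for seller, buyer, energy in trades:
        delta = trade_flows(seller, buyer, energy, parent)
        if all(abs(line_flow[b] + d) <= capacity[b] for b, d in delta.items()):
            for b, d in delta.items():
                line_flow[b] += d
            accepted.append((seller, buyer, energy))
    return accepted, line_flow


# Hypothetical feeder: "sub" is the substation; "c" hangs off "a".
parent = {"sub": None, "a": "sub", "b": "sub", "c": "a"}
capacity = {"a": 10, "b": 10, "c": 5}
trades = [("c", "b", 4), ("c", "a", 3), ("b", "a", 6)]
accepted, line_flow = clear_trades(trades, parent, capacity)
```

In this example, the second trade is rejected because it would push the line above bus `c` to 7 units against a limit of 5. The third trade is accepted because it partially cancels the flow that the first trade placed on lines `a` and `b`. Counter-flows like this are one reason matching trades against each other can free up capacity.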

Selected Videos, Talks and Posters

Fair Design of Public Transportation Lines

The booking problem

Integrated Fixed Line and On Demand Problem

A Decision Support Framework for Grid-Aware Electric Bus Charge Scheduling

Online Approach to Solve the Dynamic Vehicle Routing Problem with Stochastic Trip Requests

Predicting Public Transportation Load

Solvers behind our Microtransit Algorithms

Recent paper on the impact of COVID-19 on transit

Project presentation given at the DOE Annual Merit Review

CHARIOT Demonstration

Incident Analytics and Response Management Dashboard

Deep NN Car with SafetyManager

Data-driven Route Level Energy Prediction for Mixed Fleet

Providing Information to Commuters

Energy Consumption Dashboard

Modular Mobility Application

Transax-Blockchain and Transactive Energy

Multi-Modal Mobility Workshops organized in 2018

Occupancy Analysis Dashboard for CARTA

Poster at TDEC workshop in Knoxville in 2019

Resilient Information Architecture Platform Demonstration

Mobility Application Built by Students in the Smart Cities Class

Modular Computing Platform for Public Transit

Occupancy Analysis Dashboard for WeGo Nashville

The Team

Dr. Abhishek Dubey

Lead

Dr. Abhishek Dubey is an Assistant Professor of Electrical Engineering and Computer Science at Vanderbilt University and a Senior Research Scientist at the Institute for Software Integrated Systems. Abhishek directs the SCOPE lab (Smart and resilient COmputing for Physical Environment) at the Institute for Software Integrated Systems and is the co-lead of the Vanderbilt Initiative for Smart Cities Operation and Research (VISOR). His broad research interest lies in the resilient design of cyber-physical systems. He is especially interested in performance management and in online failure detection, isolation, and recovery in smart and connected cyber-physical systems, with a focus on transportation networks and the smart grid. His key contributions include the development and deployment of resilience decision support systems for the Metropolitan Transit Authority in Nashville, a robust incident prediction and dispatch system developed for the Nashville Fire Department, and a privacy-preserving decentralized system for peer-to-peer energy exchange. His other contributions include middleware for online fault detection and recovery in software-intensive distributed systems, and a robust software model for building cyber-physical applications that provides spatial and temporal separation among system components, which guarantees fault isolation. Recently, this work has been adapted for fault detection and isolation in breaker assemblies in power transmission lines. His work has been funded by the National Science Foundation, NASA, DOE, ARPA-E, AFRL, DARPA, Siemens, Cisco, and IBM. Abhishek received his PhD in Electrical Engineering from Vanderbilt University in 2009, working on fault detection and isolation for large computing clusters. He received his M.S. in Electrical Engineering from Vanderbilt University in August 2005 and completed his undergraduate studies in Electrical Engineering at the Indian Institute of Technology, Banaras Hindu University, India, in May 2001.

Agrima Khanna

Graduate Research Assistant

Agrima Khanna is a graduate student in the Department of Electrical Engineering and Computer Science at Vanderbilt University and works as a research assistant at the Institute for Software Integrated Systems. She received her bachelor’s degree in computer science from Netaji Subhas University of Technology, New Delhi, in May 2023.

Ammar Zulqarnain

Graduate Research Assistant

Ammar Bin Zulqarnain is a graduate student in the Department of Computer Science at Vanderbilt University and works as a research assistant at the Institute for Software Integrated Systems. He received his Bachelor’s degree in Engineering Science and Economics from Vanderbilt University in May 2022.

Dr. Ava Pettet

Visiting Scholar

Ava Pettet is currently a visiting scholar at the Institute for Software Integrated Systems. She received her Ph.D. in computer science from Vanderbilt University in 2022. She completed her undergraduate studies in computer science at Vanderbilt University in May 2016.

Dr. Ayan Mukhopadhyay

Senior Research Scientist

Dr. Ayan Mukhopadhyay is a Senior Research Scientist in the Department of Electrical Engineering and Computer Science at Vanderbilt University, USA. Previously, he was a Post-Doctoral Research Fellow at the Stanford Intelligent Systems Lab at Stanford University, USA. He was awarded the 2019 CARS post-doctoral fellowship by the Center for Automotive Research at Stanford (CARS). Before joining Stanford, he completed his Ph.D. at Vanderbilt University’s Computational Economics Research Lab, and his doctoral thesis was nominated for the Victor Lesser Distinguished Dissertation Award 2020. His research interests include multi-agent systems, robust machine learning, and decision-making under uncertainty, applied at the intersection of CPS and smart cities. His work has been published in top-tier AI and CPS conferences such as AAMAS, UAI, and ICCPS. His work on creating proactive emergency response pipelines has been covered in Government Technology magazine, won the best paper award at ICLR’s AI for Social Good Workshop, and been featured at multiple smart city symposiums.

Baiting Luo

Graduate Research Assistant

Baiting Luo is a graduate student in the Department of Electrical Engineering and Computer Science at Vanderbilt University and works as a research assistant at the Institute for Software Integrated Systems. He received his M.S. degree in Computer Engineering from Northwestern University in June 2021 and his Bachelor’s degree in Computer Engineering and Computer Science from Rensselaer Polytechnic Institute in 2019.

David Rogers

Senior Research Engineer

David Rogers is a Senior Research Engineer at the Institute for Software Integrated Systems. He graduated from Georgia Tech with a BS in Electrical Engineering and received his MS in Electrical and Computer Engineering in 2025. Prior to joining Vanderbilt, David was a civilian software engineer for the US Air Force, where he maintained and developed mission-critical software.

Dr. Fangqi Liu

PostDoc

Fangqi Liu earned her Ph.D. in Networked Systems from the University of California, Irvine, in 2023. She holds bachelor’s and master’s degrees from Jilin University, China. Her research focuses on mobile vehicular ad hoc networks, motion planning, scheduling optimization for mobile vehicles, as well as IoT and CPS applications for emergency monitoring.

Jacob Buckelew

Graduate Research Assistant

Jacob Buckelew is a graduate student in the Department of Electrical Engineering and Computer Science at Vanderbilt University and works as a research assistant at the Institute for Software Integrated Systems. He received his Bachelor’s degree in Computer Science and Mathematics from Rollins College in May 2022.

Dr. Jose Paolo Talusan

Research Scientist

Dr. Jose Paolo Talusan is a Research Scientist in the Department of Computer Science and Computer Engineering at Vanderbilt University. He earned his PhD from the Nara Institute of Science and Technology, Japan in 2020. His research interests include middleware and distributed computing systems, with a focus on smart transportation networks.

Dr. Michael Wilbur

Visiting Scholar

Dr. Michael Wilbur is a Visiting Scholar at the Institute for Software Integrated Systems. He received his Ph.D. in Computer Science from Vanderbilt University in 2023, his M.S. degree in Structural Engineering from Northwestern University in December 2018, and his Bachelor’s degree in Civil Engineering from The University of Notre Dame in 2012.

Nathaniel Keplinger

Graduate Research Assistant

Nathaniel Keplinger is a graduate student in the Department of Computer Science at Vanderbilt University and a research assistant at the Institute for Software Integrated Systems. He received a bachelor’s degree in physics and Chinese language from Trinity University in San Antonio, TX, in May 2021. He later completed an MS in data science at the Colorado School of Mines. His research interests lie at the intersection of online planning, decision-making under uncertainty, and cyber-physical systems.

Rishav Sen

Graduate Research Assistant

Rishav Sen is a graduate student and a Russell G. Hamilton Scholar in the Department of Electrical Engineering and Computer Science at Vanderbilt University. He completed his undergraduate degree in Electronics and Communication at the Heritage Institute of Technology in August 2020 and worked as a software engineer before starting his graduate studies.

Samir Gupta

Graduate Research Assistant

Samir Gupta is a graduate student in the Department of Electrical Engineering and Computer Science at Vanderbilt University and works as a research assistant in the SCOPE Lab at the Institute for Software Integrated Systems. He received his bachelor’s degree in computer science and engineering from Malaviya National Institute of Technology, Jaipur, in May 2021.

Vakul Nath

Graduate Research Assistant

Vakul Nath is a graduate student in the Department of Computer Science at Vanderbilt University and works as a research assistant at the Institute for Software Integrated Systems. He received his Bachelor’s degree in Computer Science from Stetson University in May 2024.

Yunuo Zhang

Graduate Research Assistant

Yunuo Zhang is a graduate student in the Department of Electrical Engineering and Computer Science at Vanderbilt University and works as a research assistant at the Institute for Software Integrated Systems. He received his Bachelor’s degree in Computer Science and Applied Mathematics from Vanderbilt University in December 2021.

Selected Publications

- S. Eisele, T. Eghtesad, K. Campanelli, P. Agrawal, A. Laszka, and A. Dubey, Safe and Private Forward-Trading Platform for Transactive Microgrids, ACM Trans. Cyber-Phys. Syst., vol. 5, no. 1, Jan. 2021.

- C. Hartsell, S. Ramakrishna, A. Dubey, D. Stojcsics, N. Mahadevan, and G. Karsai, ReSonAte: A Runtime Risk Assessment Framework for Autonomous Systems, in 16th International Symposium on Software Engineering for Adaptive and Self-Managing Systems, SEAMS 2021, 2021.

- S. Eisele, T. Eghtesad, N. Troutman, A. Laszka, and A. Dubey, Mechanisms for Outsourcing Computation via a Decentralized Market, in 14th ACM International Conference on Distributed and Event Based Systems, 2020.

- S. Hasan, A. Dubey, G. Karsai, and X. Koutsoukos, A game-theoretic approach for power systems defense against dynamic cyber-attacks, International Journal of Electrical Power & Energy Systems, vol. 115, 2020.

- G. Pettet, A. Mukhopadhyay, M. Kochenderfer, Y. Vorobeychik, and A. Dubey, On Algorithmic Decision Procedures in Emergency Response Systems in Smart and Connected Communities, in Proceedings of the 19th Conference on Autonomous Agents and MultiAgent Systems, AAMAS 2020, Auckland, New Zealand, 2020.

- S. Ramakrishna, C. Hartsell, M. P. Burruss, G. Karsai, and A. Dubey, Dynamic-weighted simplex strategy for learning enabled cyber physical systems, Journal of Systems Architecture, vol. 111, p. 101760, 2020.

- F. Sun, A. Dubey, J. White, and A. Gokhale, Transit-hub: a smart public transportation decision support system with multi-timescale analytical services, Cluster Computing, vol. 22, no. Suppl 1, pp. 2239–2254, Jan. 2019.

- C. Hartsell, N. Mahadevan, S. Ramakrishna, A. Dubey, T. Bapty, T. T. Johnson, X. D. Koutsoukos, J. Sztipanovits, and G. Karsai, CPS Design with Learning-Enabled Components: A Case Study, in Proceedings of the 30th International Workshop on Rapid System Prototyping, RSP 2019, New York, NY, USA, October 17-18, 2019, 2019, pp. 57–63.

- S. Pradhan, A. Dubey, S. Khare, S. Nannapaneni, A. Gokhale, S. Mahadevan, D. C. Schmidt, and M. Lehofer, CHARIOT: Goal-Driven Orchestration Middleware for Resilient IoT Systems, ACM Trans. Cyber-Phys. Syst., vol. 2, no. 3, June 2018.

- M. García-Valls, A. Dubey, and V. J. Botti, Introducing the new paradigm of Social Dispersed Computing: Applications, Technologies and Challenges, Journal of Systems Architecture - Embedded Systems Design, vol. 91, pp. 83–102, 2018.

- D. Balasubramanian, A. Dubey, W. Otte, T. Levendovszky, A. S. Gokhale, P. S. Kumar, W. Emfinger, and G. Karsai, DREMS ML: A wide spectrum architecture design language for distributed computing platforms, Sci. Comput. Program., vol. 106, pp. 3–29, 2015.

- T. Levendovszky, A. Dubey, W. Otte, D. Balasubramanian, A. Coglio, S. Nyako, W. Emfinger, P. S. Kumar, A. S. Gokhale, and G. Karsai, Distributed Real-Time Managed Systems: A Model-Driven Distributed Secure Information Architecture Platform for Managed Embedded Systems, IEEE Software, vol. 31, no. 2, pp. 62–69, 2014.

- N. Mahadevan, A. Dubey, and G. Karsai, Application of software health management techniques, in 2011 ICSE Symposium on Software Engineering for Adaptive and Self-Managing Systems, SEAMS 2011, Waikiki, Honolulu, HI, USA, May 23-24, 2011, 2011, pp. 1–10.

- N. Roy, A. Dubey, and A. S. Gokhale, Efficient Autoscaling in the Cloud Using Predictive Models for Workload Forecasting, in IEEE International Conference on Cloud Computing, CLOUD 2011, Washington, DC, USA, 4-9 July, 2011, 2011, pp. 500–507.